Speaker: Dr Zi Wang, Google DeepMind

Date: Sep 6, 2024

Time: 9:00AM – 10:30AM SGT

Please register for the talk here.

Abstract:

Bayesian Optimization (BO) is a powerful approach for optimizing expensive black-box functions. However, its success depends heavily on expert-specified Gaussian Process (GP) priors, which can be difficult to construct in practice. This talk explores using pre-training to specify these functional priors in two settings: 1) homogeneous, where the “training” functions share the same domain as the “test” function (the black-box function to be optimized); and 2) heterogeneous, where the domains of all training and test functions can differ. In the homogeneous setting, we introduce HyperBO, which pre-trains a GP on the training functions and uses it to optimize the test function. This approach yields bounded posterior predictions and near-zero regret without requiring knowledge of the ground-truth GP prior. For the heterogeneous setting, we introduce a method for model pre-training on heterogeneous domains (MPHD). MPHD retains the benefits of HyperBO and adds domain adaptability: using a neural net pre-trained on existing domains, it generates customized hierarchical GP specifications from domain descriptions. We discuss the theoretical and practical advantages of both methods and demonstrate their superior performance on a wide range of real-world tuning tasks.

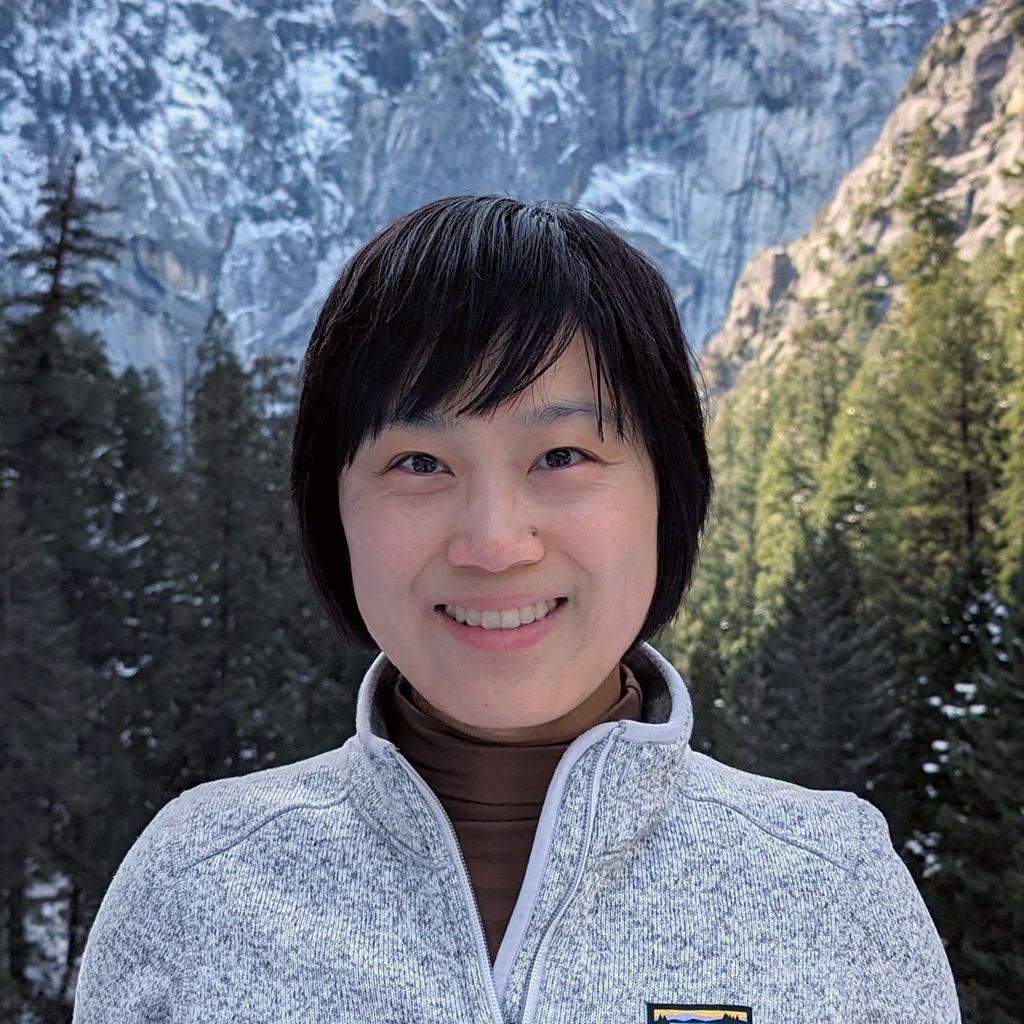

Biography:

Zi Wang is a senior research scientist at Google DeepMind. She works on intelligent decision making under uncertainty, with a focus on understanding and improving the alignment between human judgements and Bayesian beliefs in AI systems. Zi obtained her Ph.D. in computer science from MIT in 2020 and was a lecturer at Harvard University in 2022.