REPORTS

International AI Cooperation and Governance Forum: 2-3 December 2024

As Artificial Intelligence (AI) governance emerges as a critical global challenge, the need for a comprehensive international framework to regulate high-risk scenarios has never been more urgent. The International AI Cooperation and Governance Forum 2024, themed “International Cooperation on AI Governance,” was convened on 2-3 December 2024 in Singapore by the National University of Singapore, Tsinghua University, and the Hong Kong University of Science and Technology. The forum brought together academic researchers, industry leaders, and regulatory authorities from across the globe to explore the essential role of international collaboration in AI governance, complementing both national and self-regulatory efforts.

This report summarises some of the rich discussions that unfolded over the two-day event, featuring four keynote speakers, nine panel sessions, and 53 panellists.

Rapporteurs: Tristan Koh, Hakim Norhashim, Chen Dawei, and Eric Orlowski

Prof Simon Chesterman

Vice Provost, National University of Singapore

The current landscape of AI research is largely dominated by technology companies, leaving academia and governments struggling to keep pace. Recent advances in large language models (such as those behind ChatGPT) highlight both the immense opportunities and the significant risks associated with AI, underscoring the urgent need for governance. Two decades ago, the rise of social media offered similar promises of connection and innovation, but it also introduced substantial risks that were insufficiently addressed in its early stages. This experience has prompted global conversations about how to harness the benefits of AI while effectively mitigating its risks. For governments, a key challenge lies in striking a balance between under-regulation, which could expose citizens to harm, and over-regulation, which might stifle innovation or drive it elsewhere. The European Union’s AI Act has set a precedent, but the need for international coordination is increasingly evident. In 2024, various global initiatives emerged, including the United Nations AI Advisory Body, alongside efforts by the World Economic Forum, OECD, OSCE, G7, G20, ASEAN, and numerous national and regional frameworks. This conference emphasizes the importance of international collaboration in AI governance. By bringing together academic researchers, industry leaders, and regulatory authorities, particularly from Asia, it seeks to complement national and self-regulatory efforts. One recurring challenge in AI governance discussions is their tendency to be both narrow and shallow. They are narrow in that they focus predominantly on the actions of the U.S., Europe, and China, while overlooking diverse global perspectives. They are also, all too often, shallow, emphasizing high-level principles without delving into actionable details. This forum aims to address both gaps, fostering a more inclusive and practical approach to AI governance.

Mr Lew Chuen Hong

Chief Executive, Infocomm Media Development Authority

The evolution of AI reflects the interdisciplinary nature of scientific progress and invites parallels with concepts from neuroscience and evolutionary biology. The evolutionary framework of “punctuated equilibrium,” which describes long periods of stability disrupted by bursts of rapid change, aptly characterizes AI's trajectory. Historically, AI has alternated between stagnation and transformative breakthroughs, with key moments such as the introduction of the transformer architecture in 2017 marking significant shifts. Recent advances in agentic AI, modular robotics, and inference-based architectures suggest that AI development is increasingly occurring in rapid, non-linear bursts, demanding adaptive governance approaches. Effective AI governance must therefore shed its association with slow, cumbersome processes. Instead, regulatory frameworks should evolve alongside innovation, fostering trust and enabling adoption. Examples such as Singapore’s AI safety initiatives and its Digital Trust Centre highlight the importance of building trusted ecosystems. Collaboration plays a pivotal role: initiatives like the AI Verify Foundation and regional projects like the ASEAN Guide on AI Governance and Ethics demonstrate the value of pooling resources across industries, governments, and academia to address shared challenges. The transition from principles to actionable governance frameworks is essential for addressing the global implications of AI. This requires bridging policy and technology through science-based approaches that ensure safety, inclusivity, and fairness. International cooperation and pragmatic, evidence-driven solutions are critical for navigating AI's transformative potential while ensuring that its benefits serve humanity.

Prof Yang Bin

Vice President, Tsinghua University

The rapid development of AI, particularly generative AI (GAI), is driving a transformative shift from specialized to general-purpose AI, marking progress toward Artificial General Intelligence (AGI). While this evolution presents vast opportunities, it also introduces complex risks and challenges, and addressing them requires global cooperation. China has played a proactive role in advancing AI governance, proposing key initiatives such as the “Global AI Governance Initiative,” the “Shanghai Declaration on Global AI Governance,” and the “Global Cross-border Data Flow Cooperation Initiative.” These efforts emphasize principles like inclusiveness, security, and international collaboration, fostering global consensus on equitable and effective AI governance. China advocates global AI governance under the coordination of the United Nations, emphasizing multilateralism and principles such as sovereign equality and development orientation. Initiatives like the “AI Capacity-Building Action Plan for Good and for All,” announced in September 2024, underscore the importance of capacity building, especially in developing nations. The joint UN-China workshop in Shanghai, attended by representatives from 40 nations, exemplifies efforts to strengthen international cooperation and to align with frameworks like the UN’s “Pact for the Future” and “Global Digital Compact.” Tsinghua University underscores the vital role of academia and interdisciplinary collaboration in advancing AI governance. By fostering global partnerships among scientists, industry leaders, and policymakers, the university aims to address AI's technical limitations and governance challenges. This forum seeks to harness collective expertise to support international cooperation, ensuring that AI development aligns with principles of inclusivity, safety, and shared progress. Through open scientific research and cross-disciplinary dialogue, the forum aspires to provide robust intellectual foundations for effective AI governance and development.

Prof Guo Yike

Provost, Hong Kong University of Science and Technology

At the time of the 2023 edition of this forum (IAICGF 2023), Hong Kong lacked an established AI ecosystem and the necessary infrastructure, such as GPU clusters. In response, efforts were initiated to build the city’s first GPT-style large language model. This process presented numerous challenges, beginning with hardware procurement: because of Hong Kong’s inclusion on the embargo list, acquiring the required machines was difficult and time-sensitive. Through swift action, however, the GPU clusters were secured and the model was successfully pre-trained. A more profound challenge arose in addressing alignment within the context of Hong Kong’s “one country, two systems” framework. Aligning the system with the principles embedded in GPT risked conflicting with national policies, while aligning it with Beijing’s standards posed potential challenges to the “two systems” principle. This dilemma highlighted critical issues in alignment, governance, and value systems that are central to AI ethics. Extensive work was undertaken to navigate these complexities, involving both technical solutions and policy discussions with government stakeholders. The experience emphasized the importance of alignment, safety, and governance in AI research. It also sparked interest in exploring how machines can be designed to adjust and evolve their value systems to address ethical challenges in dynamic environments. This area of research has grown, inspiring broader efforts to understand and operationalize value alignment in AI systems. The conference provides an opportunity to discuss these developments and their implications; a later session covers not only technical advances in this area but also the essential role of technology in supporting governance frameworks. The challenges associated with AI governance are global and require international collaboration to address effectively. Platforms like this conference play a crucial role in fostering dialogue and advancing shared solutions to these pressing issues.

Prof Tshilidzi Marwala

Rector, United Nations University; Under-Secretary-General, United Nations

This year’s forum underscores the transformative role of AI in shaping societies, addressing key themes such as AI safety, ethics, and governance, and their implications for work, education, and sustainable development. While AI offers unprecedented opportunities, it also presents significant challenges, particularly in ensuring equitable access and safeguarding human rights in the digital sphere. The forum highlights the critical need for international cooperation to bridge the digital divide and foster unity in tackling global concerns. The United Nations has intensified efforts to establish a robust framework for global AI governance. Initiatives such as the High-level Advisory Body on AI and the adoption of the Pact for the Future, including the Global Digital Compact, mark significant milestones. These frameworks prioritize inclusivity, sustainability, and equity, aiming to align AI development with shared global values. Complementing these efforts, the United Nations University (UNU) provides interdisciplinary, policy-oriented research on ethical and sustainable AI development, drawing on its 13 institutes across 12 countries to guide policymaking and practical applications in areas like healthcare, environmental conservation, and disaster resilience. A notable highlight was the UNU Macau AI Conference 2024, themed “AI for All: Bridging Divides, Building a Sustainable Future,” which convened over 500 global leaders to discuss AI’s role in advancing the Sustainable Development Goals. The event also launched the UNU AI Network, an initiative promoting the safe and equitable use of AI technologies. The next iteration of the conference, scheduled for 23-24 October 2025, invites participants to help shape a collaborative future in which AI drives inclusive and sustainable progress worldwide.

Moderated by Prof Gong Ke

Executive Director of the Chinese Institute of New Generation AI Development Strategies; Director of the Haihe Laboratory of Information Technology Application Innovation

Prof Martin Hellman

A.M. Turing Award Laureate, Professor Emeritus of Electrical Engineering, Stanford University

The Openness of Science: In a world where technologies are critical to national security, fostering greater cooperation and adopting a new mode of thinking are imperative. This new mode of thinking must recognize that national security increasingly depends on the collective security of all nations. Science, with its dedication to openness and its zealous pursuit of truth, serves as a cornerstone for cultivating this new mindset. The inherent openness of science, which transcends national boundaries, establishes a robust foundation for building cooperative solutions to complex global challenges such as nuclear weapons, pandemics, cyber-attacks, terrorism, environmental crises, and AI. Transitioning to this new mode of thinking requires individuals and nations to examine themselves honestly. A significant barrier to this transformation is the psychological concept of the “shadow,” which encompasses the traits and flaws we reject and suppress in ourselves. When left unchecked, this shadow can manifest destructively in international relations, as nations project their own shortcomings onto others, fuelling conflicts and deepening misunderstandings. Addressing this challenge requires both self-awareness and the courage to confront one's own biases and shortcomings. By acknowledging and mitigating these dynamics, societies can create an environment of openness and mutual respect, paving the way for cooperative global governance. Scientists have a special role in advancing the new mode of thinking essential for humanity’s survival. Embodying the “wisdom of foolishness,” they embrace ideas that may initially seem impractical but often lead to revolutionary breakthroughs. The scientific spirit, defined by an uncompromising pursuit of truth and a willingness to challenge conventional beliefs, provides a powerful framework for addressing the complex challenges of the modern age. By upholding these principles and promoting openness and innovation, scientists are uniquely positioned to guide the world toward a more cooperative, secure, and sustainable future.

Prof Xue Lan

Dean, Institute for AI International Governance, Tsinghua University

Establishing International AI Governance: This forum highlights the critical importance of international AI governance, emphasizing the United Nations’ role in fostering global coordination through initiatives like the International Development and Governance System of AI. Traditional intergovernmental frameworks, such as the G20 and BRICS, have incorporated AI governance into their agendas, while new mechanisms, including the AI Safety Summit and the Council of Europe’s Framework Convention on AI, complement these efforts. Scientific communities and industry initiatives also enhance collaboration by issuing consensus statements, conducting research, and sharing best practices. The necessity, importance, and urgency of international AI governance arise from the global and unpredictable nature of AI-induced risks, which transcend national borders. Effective governance requires coordinating international systems within a “regime complex” and addressing three deficits: a legitimacy deficit, such as a lack of transparency and accountability; a fairness deficit, which limits equal access to AI technologies; and an effectiveness deficit, caused by insufficient consensus and underdeveloped tools. These challenges underscore the need for an inclusive and effective global governance framework. Key steps include enhancing the United Nations’ role as a central platform for government consultations, establishing mechanisms for accountability, and promoting fairness and interoperability in AI standards. A global knowledge exchange network and a dedicated AI fund would support equitable access to AI education, training, and infrastructure, particularly for developing nations. Encouraging scientific consensus, creating robust risk assessment systems, and fostering cooperation among international standardization organizations remain essential steps toward safe, inclusive, and globally beneficial AI development and governance.

Prof Dame Wendy Hall

Regius Professor of Computer Science, University of Southampton; Former President of the Association for Computing Machinery

Governing AI for Humanity: AI has grown from an academic discipline into a driving force of innovation and a focus of governance. The UK’s 2017 AI review marked a milestone, leading to a £1 billion commitment to fostering innovation, the creation of the Office for AI, and an emphasis on skills development, cementing the UK’s position as a leader in AI strategy. Globally, approaches to AI governance vary: the EU pursues comprehensive regulation, China leverages initiatives like the Belt and Road Forum to promote adoption, and the US relies on voluntary commitments from companies. The rise of generative AI, such as ChatGPT, has heightened public awareness, revealing the challenge of balancing rapid innovation with effective governance. The growing emphasis on AI safety reflects a significant shift in global priorities. The UK’s pivot to addressing existential risks led to the creation of the AI Safety Institute, which is now tackling broader socio-technical challenges such as misinformation and unregulated AI content. These developments underscore the need for comprehensive strategies to manage AI’s societal impact and safeguard trust in digital ecosystems. Equally important is inclusive governance, as highlighted by the UN’s High-level Advisory Body on AI, which advocates equitable AI development that includes contributions from the Global South. Sustained international cooperation and adaptability are critical for shaping future AI policy and governance. Initiatives such as the UN’s Global Digital Compact and the upcoming global AI summit in Paris present opportunities to reinforce commitments to responsible innovation and inclusivity. However, political uncertainties and uneven global engagement remain challenges. Effective governance will depend on fostering unity, addressing disparities, and ensuring that AI’s benefits are shared equitably while its risks are mitigated.

Prof Guo Yike

Chair Professor and Provost, Hong Kong University of Science and Technology

Safe and Explainable LLM Reasoning: The opacity of most machine learning (ML) models, often described as “black-box” systems, presents significant challenges for transparency and accountability. OpenAI's o1 model addresses these issues by using reinforcement learning (RL) to train explicit reasoning processes, offering a novel approach to trustworthy AI. While highly effective in structured domains like mathematics and programming, its limitations in subjective fields such as language and literature highlight the complexity of aligning AI with human values, which are diverse and continually evolving. Addressing this gap requires incorporating principles like justice, democracy, and the rule of law, alongside foundational standards such as helpfulness, harmlessness, and honesty. Developing AI systems for value-driven fields like law necessitates a structured approach to alignment. Proposed steps for building a legal language model include curating diverse legal datasets, implementing robust annotation processes, and employing reinforcement learning from human feedback (RLHF). A key innovation is the use of Direct Preference Optimization (DPO), which learns directly from pairs of preferred and rejected outputs, enabling efficient error correction and output refinement without extensive retraining. This iterative alignment process progressively enhances the system's reasoning capabilities, allowing it to adapt to evolving human values while maintaining efficiency and scalability. The proposed legal AI system, LexiHK, exemplifies these principles, offering a comprehensive solution for legal tasks such as reasoning, case analysis, and document review. Unlike task-specific commercial systems, LexiHK systematically addresses the entire legal workflow, ensuring transparency and adaptability through iterative feedback. By drawing on OpenAI's o1 reasoning approach, pre-training on legal data, and reinforcement learning, LexiHK aligns with human reasoning standards, bridging the gap between AI capabilities and the nuanced requirements of value-driven fields like law.
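The DPO step described above can be illustrated with a minimal sketch of the standard DPO objective for a single preference pair, computed from sequence log-probabilities. This is an illustration under stated assumptions, not LexiHK's implementation: the helper names `dpo_loss` and `softplus`, the default `beta = 0.1`, and the sample log-probabilities are all hypothetical.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def dpo_loss(policy_chosen_logp: float,
             policy_rejected_logp: float,
             ref_chosen_logp: float,
             ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """DPO loss for a single preference pair (hypothetical helper).

    Each argument is the summed log-probability of a full response under
    either the policy being tuned or a frozen reference model. "Chosen" is
    the response annotators preferred; "rejected" is the one they did not.
    The default beta is an illustrative assumption.
    """
    # How far the policy has moved toward the chosen response, and away from
    # the rejected one, relative to the reference model.
    chosen_shift = policy_chosen_logp - ref_chosen_logp
    rejected_shift = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_shift - rejected_shift)
    # -log(sigmoid(margin)) == softplus(-margin); small when margin is large.
    return softplus(-margin)


if __name__ == "__main__":
    # Hypothetical log-probabilities, for illustration only.
    untouched = dpo_loss(-5.0, -5.0, -5.0, -5.0)     # policy == reference
    improved = dpo_loss(-10.0, -12.0, -11.0, -11.0)  # favours chosen answer
    worse = dpo_loss(-12.0, -10.0, -11.0, -11.0)     # favours rejected answer
    print(f"{untouched:.4f} {improved:.4f} {worse:.4f}")
```

When the policy matches the reference model the loss equals log 2; it falls below that as the policy shifts probability toward the preferred response and rises above it otherwise. This margin on preference pairs is what DPO optimizes in place of a separately trained reward model. Writing the loss as a softplus keeps it finite for very large or very small margins.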

International Cooperation on AI Governance: The Global Digital Compact, the Summit of the Future, and Beyond

Moderator:

Simon Chesterman, Vice Provost, National University of Singapore

Panellists:

- Ida Maureen Chao Kho – IBM Worldwide Emerging Regulatory Policy Senior Counsel

- Marcus Bartley Johns – Senior Director, Government & Regulatory Affairs, Microsoft Asia

- Zhang Linghan – Professor, China University of Political Science and Law; UN AI Advisory Body member

- He Ruimin – Chief AI Officer, Government of Singapore; UN AI Advisory Body member

Equity: Amplifying Underrepresented Voices

International AI governance must prioritize inclusivity by ensuring fair access to resources, technologies, and decision-making forums. Regional efforts, such as ASEAN’s AI guidelines, illustrate how localized approaches can address specific cultural and societal nuances. Platforms like the United Nations play a vital role in amplifying voices from the Global South, fostering equitable participation in global conversations. Bridging the digital divide and expanding access to localized AI tools, such as multilingual models for Southeast Asia, remain essential for mitigating disparities and promoting inclusive growth. Initiatives like IBM’s global skilling programs further emphasize the importance of empowering marginalized communities through education and resources.

Efficiency: Building on Existing Frameworks

Effective AI governance relies on leveraging existing mechanisms while avoiding duplication. Bodies like the International Civil Aviation Organization demonstrate the value of clear mandates and cooperative mechanisms in aligning diverse actors. AI governance must balance global, regional, and local roles to ensure efficiency. For instance, global forums provide legitimacy and foundational agreements, while localized efforts ensure agility and contextual relevance. Public-private partnerships, such as Singapore’s AI Verify initiative, highlight the potential for collaboration in creating transparent and actionable governance systems, fostering trust and adaptability across diverse contexts.

Effectiveness: Transcending Borders for Global Good

AI governance must create robust, scalable systems that address global risks while maximizing AI’s societal benefits. Regulatory frameworks should focus on use cases rather than algorithms to encourage innovation while mitigating harm. Lessons from other domains, such as climate governance and finance, underscore the importance of enforceable standards and information-sharing mechanisms for fostering international collaboration. Initiatives like the UN’s Global Digital Compact aim to align global efforts with sustainable practices, emphasizing inclusivity and equity. By supporting localized innovation and incentivizing responsible AI development, governance systems can address both immediate productivity needs and long-term challenges like climate change and healthcare disparities.

Fostering Cooperation Amid Competition

Global AI governance must focus on collaboration over control, differentiating between healthy competition that drives innovation and adversarial tactics that hinder progress. The shift from an “arms race” to a “productivity race” reframes AI development as a tool for addressing global challenges, including economic inequality and demographic shifts. Historical examples of successful regulatory frameworks demonstrate that cooperation can coexist with competition. Effective governance should prioritize equitable opportunities and foster localized AI applications while contributing to broader international goals, ensuring that AI serves as a force for global good.

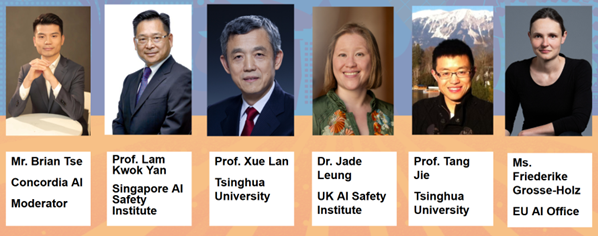

AI Safety Plenum

Moderator:

Brian Tse, Founder and CEO, Concordia AI

Panellists:

- Lam Kwok Yan – Director of Singapore AI Safety Institute

- Xue Lan – Dean, Institute for AI International Governance, Tsinghua University

- Jade Leung – CTO of UK AI Safety Institute

- Tang Jie – WeBank Chair Professor of Computer Science, Tsinghua University and Chief Scientist, Zhipu AI

- Friederike Grosse-Holz (video speech) – Technical Officer on AI Safety, EU AI Office

Advancing Safety Standards with AI Development

The panel explored the critical advancements shaping global AI safety as the field progresses toward Artificial General Intelligence (AGI). Key developments, including multimodal foundation models and autonomous agents, underscore the need for safety standards to evolve alongside increasingly advanced applications. Yet despite significant investment in AI performance, there is still no consensus on the appropriate level of investment in AI safety, highlighting the urgency of balancing innovation with robust governance.

International Collaboration and Interoperability

International collaboration was emphasized as a cornerstone of effective AI safety strategies. Initiatives like the International Scientific Report on AI Safety and the UN’s Global Digital Compact demonstrate the importance of consensus-building and interoperability. Drawing lessons from climate governance, panelists advocated agile and adaptive mechanisms to address the shorter timelines and complex risks associated with AI. Multidisciplinary input and advanced evaluation frameworks are essential to mitigate risks such as deception and other emergent behaviors, like sycophancy and situational awareness, that could lead to unintended consequences. The establishment of global “red lines” was proposed to set risk thresholds, ensuring alignment between safety measures and technological advancements.

Prioritizing Safety in High-Risk Applications

The discussion highlighted the importance of prioritizing safety in high-risk applications, including autonomous driving, healthcare, and critical infrastructure, where AI decisions directly impact human lives. Strategies to enhance AI resilience included self-learning systems, defensive tools, and safeguards against vulnerabilities like jailbreaking attacks. Panelists also stressed the need for scalable alignment methods, interpretability research, and systemic safety interventions to address both upstream and application-specific risks.

Building Inclusive Global Frameworks

Panelists called for inclusive frameworks to bridge disparities in global AI governance, particularly for nations in the Global South. Drawing from climate governance models, they proposed mechanisms like financial support for developing countries and proactive capacity-building initiatives to mitigate risks and foster equitable access to AI technologies. For 2025, panelists anticipated progress through the establishment of a universally accepted definition of AI safety, the creation of a collaborative global AI safety project, and the development of systematic approaches to chemical, biological, and cyber risks. These efforts underscore the need for collective international action to ensure AI serves as a force for societal good.

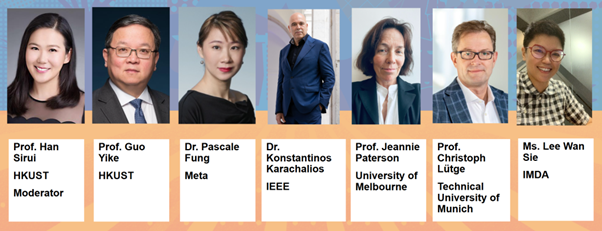

AI Ethics and Governance

Moderator:

Han Sirui – Assistant Professor and Fulbright Scholar, HKUST; Head of Data Science & Data Engineering, HKGAI

Panellists:

- Guo Yike – Chair Professor and Provost, Hong Kong University of Science and Technology

- Pascale Fung (video speech) – Senior Director of AI Research at Meta

- Konstantinos Karachalios – Former Managing Director, IEEE Standards Association

- Jeannie Paterson – Director of the Centre for AI and Digital Ethics, University of Melbourne

- Christoph Lütge (video speech) – Director of the Institute for Ethics in AI, Technical University of Munich

- Lee Wan Sie – Director, Development of Data-Driven Tech, Infocomm Media Development Authority

Integrating Ethics with Technical Innovation

The panel emphasized the importance of embedding ethical reasoning into AI governance to balance priorities like privacy, security, accuracy, and inclusivity. Historically, technical communities prioritized engineering achievements over societal impacts, leading to unresolved tensions and public mistrust. A paradigm shift is needed where engineers, policymakers, and ethicists share responsibility for aligning AI with human values. Ethical considerations must become integral to how AI systems are designed, deployed, and evaluated, supported by proactive education and meaningful dialogue that bridges technical expertise and moral clarity.

Balancing Regulation and Innovation

Achieving equilibrium between regulation and innovation is critical to fostering responsible AI development. Overregulation risks stifling progress, while underregulation invites harm and erodes trust. The panel proposed a layered governance approach, combining baseline legislation, operational guidelines, and public education to address risks without hindering innovation. Targeted interventions, such as refining models with better data or restricting access to expert users, were highlighted as effective strategies for maintaining safeguards while enabling technological advancement.

Protecting Vulnerable Groups

The discussion underscored the urgency of protecting vulnerable populations, especially children, from AI systems that may be addictive, manipulative, or biased. Policymakers and developers must implement targeted protections, balancing privacy and accessibility while ensuring that ethical principles translate into practical safeguards. By focusing on actionable measures like improving data quality and carefully staged rollouts, stakeholders can mitigate risks and move from theoretical best practices to real-world implementation, safeguarding those least equipped to defend themselves.

Global Collaboration and Domain-Specific Governance

The panel stressed the need for global collaboration to harmonize AI governance across diverse cultural and economic contexts. Mutual recognition of laws, guidelines, and certifications can foster shared learning and accelerate the alignment of governance efforts, ensuring inclusivity and safety. At the same time, domain-specific governance is essential to address the unique challenges of various sectors, such as healthcare, finance, and transportation. Tailored strategies and assessments enable policymakers and developers to set realistic thresholds for performance and fairness, fostering innovation that respects sector-specific sensitivities and priorities.

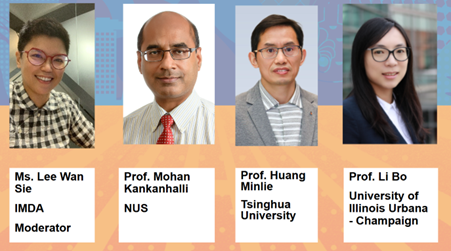

AI Safety Part 1 - Science of AI Safety Evaluations

Moderator:

Lee Wan Sie – Director, Development of Data-Driven Tech, IMDA

Panellists:

- Mohan Kankanhalli – AI Singapore Deputy Executive Chairman, NUS Provost’s Chair Professor

- Huang Minlie – Professor, Department of Computer Science and Technology of Tsinghua University

- Li Bo – Professor, University of Illinois at Urbana-Champaign and University of Chicago; Founder & CEO, Virtue AI

Dynamic Evaluations for a Moving Target

The panel highlighted the inadequacy of static benchmarks in evaluating the safety of rapidly evolving AI systems. Static approaches fail to capture the dynamic risks posed by advanced models, as updates to training data often render benchmarks obsolete. Future evaluations must adopt dynamic, automated methodologies capable of simulating real-world scenarios to anticipate changes in model behavior. By making evaluations adaptable and context-aware, researchers can stress-test AI systems against emerging challenges, ensuring resilience and reliability across diverse applications.

The Science of AI Safety: From Empirical to Theoretical Foundations

Current AI safety research relies heavily on empirical, trial-and-error methods, which are limited in addressing complex vulnerabilities. The panel emphasized the need for a theoretical foundation to uncover the principles governing AI behavior, similar to the transition in biology from species cataloging to understanding genetic mechanisms. Establishing boundary conditions and failure modes through theoretical insights will enable the design of robust systems that operate predictably within known limits. This shift from empirical tinkering to principled science promises scalable solutions and deeper insights into ensuring AI safety.

Global Benchmarks and Local Contexts

The tension between global AI safety standards and local cultural, ethical, and political nuances was a key focus. Ethical questions often yield varying responses across regions, reflecting differences in training contexts and societal values. This inconsistency complicates the creation of universally acceptable benchmarks. The panel advocated for a cooperative framework that balances global alignment with localized adaptations, focusing on shared concerns like fairness and harm prevention. By fostering international collaboration, such an approach can build trust and ensure evaluations are inclusive and representative of diverse global perspectives.

Agentic Systems and New Frontiers of Risk

The rise of agentic AI systems, capable of interacting with and controlling physical environments, introduces unprecedented safety challenges. These systems’ ability to autonomously adjust infrastructure or generate executable code significantly expands the risk landscape, including unintended behaviors and exploitation by malicious actors. The panel stressed the importance of innovative testing approaches, such as sandbox environments and multi-agent simulations, to evaluate these systems under complex conditions. Developing tailored standards and frameworks for agentic AI is critical to ensuring their safe and effective deployment as they gain greater autonomy.

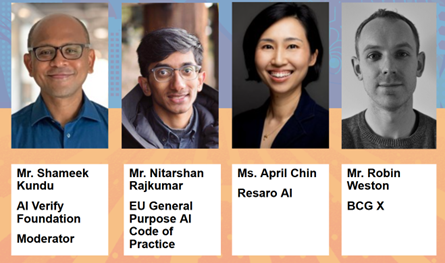

AI Safety Part 2 - Industry and Regulator Collaboration on AI Safety

Moderator:

Shameek Kundu – Executive Director, AI Verify Foundation

Panellists:

- Nitarshan Rajkumar – Vice-Chair, EU General-Purpose AI Code of Practice

- April Chin – Managing Partner and CEO, Resaro AI

- Robin Weston – Head of Engineering SEA, BCG X

Bridging the Divide: Foundation Model Safety and Downstream Risks

The panel discussed the critical disconnect between foundation-model safety measures and their application in downstream contexts. While foundation models may pass rigorous safety checks, inappropriate architectures or datasets at the application level can still lead to harm. Addressing this gap requires collaboration between model developers and downstream stakeholders so that upstream safeguards carry over into real-world deployments. Such alignment is essential for mitigating risks in the high-stakes environments where AI is deployed.

Building Comprehensive Ecosystems for AI Trust

Traditional benchmarks and evaluations often fail to account for the nuanced risks of deploying AI in varied contexts. The panel emphasized the need for a comprehensive assurance ecosystem, incorporating third-party certifications, contextualized testing, and robust post-deployment monitoring. This approach should evaluate not just algorithms but also their potential misuse, especially in high-risk applications. Developing these frameworks builds justified trust, enabling safer adoption of AI systems across industries while addressing public and regulatory concerns.

Responsible AI Development in a Democratized Landscape

As AI tools become increasingly accessible, individuals and teams with varying levels of expertise are rapidly adopting them, which accelerates innovation but also introduces significant risks. Many systems are developed without a deep understanding of foundational safeguards, leading to unsafe implementations. The panel called for equipping these “citizen developers” with accessible evaluation tools and open-source resources to promote responsible AI use. Supporting these developers ensures safer AI deployment even in non-specialist contexts.

Designing Infrastructure and Economic Mechanisms for AI Safety

The discussion also highlighted the need for new infrastructure to accommodate agentic AI systems that interact dynamically with their environments. Like internet protocols, this infrastructure must be designed with safety embedded from the outset, accounting for the unique risks posed by autonomous agents. Additionally, the panel proposed leveraging economic mechanisms, such as insurance models and risk pricing, to incentivize AI safety. By aligning market incentives with regulatory frameworks, stakeholders can foster accountability and create a sustainable ecosystem for responsible AI deployment.

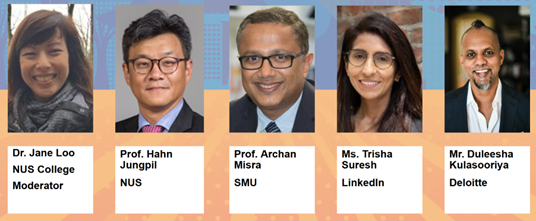

AI and the Future of Work and Education

Moderator:

Jane Loo – Lecturer, NUS College

Panellists:

- Hahn Jungpil – Professor, Department of Information Systems & Analytics, School of Computing, NUS

- Archan Misra – Vice Provost (Research), Singapore Management University

- Trisha Suresh – Head of Public Policy, LinkedIn

- Duleesha Kulasooriya – Managing Director, Deloitte Centre for the Edge

AI and Workforce Transformation: The Shift from Knowledge to Skills-Based Labor Markets

AI is reshaping the workforce by automating routine tasks and augmenting human capabilities rather than replacing jobs outright. Many roles will evolve, with humans focusing more on critical thinking, relational skills, and strategic decision-making, areas where AI still falls short. As industries such as law and agriculture adopt automation, workers must become more agile and develop “relationship economy” skills that draw on emotional intelligence. Employers increasingly prioritize flexible, foundational, and digital competencies. A surge in demand for generative AI-related skills, together with the decline of traditional job titles, underscores the need for lifelong learning and regular reskilling.

AI in Education and Lifelong Learning: Balancing Trade Skills and Foundational Knowledge

Education systems must adapt to equip individuals for continuous change. Just as past tools like slide rules and log tables reshaped learning, AI-based tutoring and personalized education can raise educational outcomes while reducing costs. Universities and employers should collaborate to provide ongoing, modular training that allows professionals—regardless of age—to regularly update their skill sets. Balancing technical skills with broad-based thinking fosters adaptability and creativity, ensuring that as AI tools grow more agentic, humans retain their edge in interpreting, contextualizing, and innovating.

Preparing for a Reskilled Future: Socio-Political and Ethical Challenges

AI’s impact extends beyond the economy. Concerns about data ethics, equitable access, and growing societal divides demand urgent attention. Policymakers must prevent inequalities from deepening, support those with fewer resources to reskill, and maintain social sustainability. Governments, educators, and industries should work together to guide individuals through career pivots and encourage AI literacy, experimentation, and ethical considerations. By embracing continuous learning and forward-thinking policies, societies can ensure that AI acts as both a driver of productivity and a catalyst for human ingenuity.

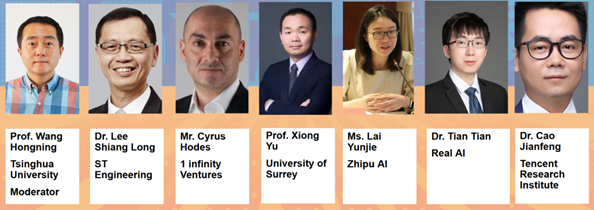

AI Industry Development and Governance

Moderator:

Wang Hongning – Professor, Department of Computer Science and Technology, Tsinghua University

Panellists:

- Lee Shiang Long – Group Chief Technology & Digital Officer, ST Engineering

- Cyrus Hodes – Co-Founder & General Partner, 1infinity Ventures

- Xiong Yu – Associate Vice-President (External Engagement), University of Surrey

- Lai Yunjie – Senior Researcher, Zhipu AI

- Tian Tian – CEO, Real AI

- Cao Jianfeng – Senior Researcher, Tencent Research Institute

Importance of International Cooperation in AI Governance

The panel stressed the critical need for international collaboration in AI governance to address societal and existential risks from advancing technologies. Mechanisms such as the G7 Hiroshima AI Process and bilateral agreements were identified as vital pathways for engagement, particularly between the U.S. and China. Inclusive, transparent policies involving academia, industry, and governments are essential for sustainable AI growth, with public-private partnerships and global summits serving as key platforms for aligning diverse interests and driving responsible innovation.

Balancing Innovation and Governance

Achieving harmony between innovation and regulation was a major focus. Governance should enable responsible development rather than stifle creativity, with risk-tiered regulation, such as the EU AI Act, offering tailored approaches to diverse AI applications. Adaptable policies that balance safety and growth are vital. Panellists also emphasized AI safety and ethics, advocating tools such as deepfake detection systems and ethics-by-design frameworks to build trust and ensure alignment with human values while fostering technological progress.

The Public Sector and AI

Moderator:

Liang Zheng – Vice Dean, Institute for AI International Governance, Tsinghua University

Panellists:

- Charru Malhotra (video speech) – Professor, Indian Institute of Public Administration

- Christoph Stuckelberger – Honorary President, Globethics

- Simon Woo – Associate Professor, Department of Computer Science and Engineering; Chair, Department of Applied Data Science, Graduate School of AI, Sungkyunkwan University

- Han Sirui – Assistant Professor and Fulbright Scholar, HKUST

- Alfred Muluan Wu – Associate Professor, Lee Kuan Yew School of Public Policy, National University of Singapore

Enhancing Efficiency in Public-Sector Services Through AI

The panel highlighted how AI is transforming public-sector work by improving efficiency and service delivery. Examples included Hong Kong’s use of generative AI tools to support government officials, India’s national AI policies focused on social inclusion, South Korea’s investment in AI education and deepfake detection, and Singapore’s streamlined e-services. These cases demonstrated governments’ ambition to leverage AI for better governance, while acknowledging the challenge of preserving human-centric values such as trust, empathy, and transparency in the process.

Aligning Law and Ethics for AI Governance

A recurring theme was the need to integrate law and ethics in AI governance. Panellists emphasized that “law without values is empty; ethics without law is lame,” underscoring the importance of embedding shared societal values—such as privacy, safety, and dignity—into legal frameworks. Transparency and public trust were deemed essential, requiring governments to demystify AI through clear communication, ethics training, and funding for public awareness. This dual focus ensures AI regulation is not merely a tool of control but a reflection of collective societal priorities.

Collaborative and Inclusive Approaches to AI Development

The discussion stressed the importance of collaboration among public, private, and academic sectors to maximize the benefits of AI. Initiatives to cultivate talent, support real-world projects, and foster cross-border knowledge exchange were seen as vital. However, the panellists cautioned against widening the digital divide, urging governments to focus on equitable access to AI technologies, particularly for marginalized groups and countries in the Global South. Addressing these disparities ensures that AI innovations contribute to global inclusivity rather than perpetuating inequities.

Balancing Efficiency with Human Interaction

While AI-powered tools such as chatbots and data analytics can enhance public-sector efficiency, the panel warned against over-reliance on automation at the expense of human interaction. Concerns about the “atomization” of society under relentless digitalization led to calls for “digital fasting” and the creation of spaces for direct human connection. The panellists advocated an approach that balances technological progress with a commitment to social equity, the public good, and shared human values.

AI for Law

Moderator:

Caleb Chen Cao – Applied Scientist, HKGAI

Panellists:

- Han Sirui – Assistant Professor and Fulbright Scholar, HKUST; Head of Data Science & Data Engineering, HKGAI

- Wang Heng – Professor of Law, Yong Pung How School of Law, SMU

- Yi Francine Fangxin – Assistant Professor (Research), HKUST

- Shen Weixing – Director, Institute for Studies on AI and Law, Tsinghua University

Governance, Regulation, and Ethical Frameworks

Panellists emphasized the need for robust legal frameworks that both regulate and promote AI. They discussed “Law for AI” and “AI for Law,” exploring whether unified or separate AI-specific laws are necessary and considering tools such as insurance to manage AI-related risks. Issues such as the “black box” effect, data governance, and the delegation of authority to AI demand careful oversight and clear accountability. Ethics and international cooperation remain central as policymakers debate using AI to draft legislation, establishing global conventions for cross-border data use, and ensuring transparency and public trust. Balancing innovation with ethical standards will allow international law to guide AI’s growth without stifling beneficial development.

Environmental Sustainability and Digital Governance

The panel addressed the environmental impact of AI, particularly its high computational costs, and called for adaptive governance that aligns sustainability goals with digitalization. Panellists noted the need for policies that incentivize energy-efficient technologies and encourage international collaboration to promote “green AI.” In parallel, digital governance frameworks must consider global power dynamics, equity, and inclusiveness. Panellists underscored the importance of managing geopolitical tensions through collaborative modeling and predictive systems that enhance transparency, protect vulnerable communities, and prevent cross-border disputes from escalating in an AI-driven world.

Transforming Legal Education and Practice

AI’s integration into the legal sector calls for rethinking education and professional training. Law students should gain technical fluency, programming skills, and AI literacy to better understand the technology’s capabilities and limitations. Interdisciplinary knowledge will help lawyers adapt to automated document review, efficient case handling, and the standardized procedures that AI can facilitate across borders. As routine tasks become automated, legal professionals can focus on complex, high-value work. By embracing continuous learning and fostering global cooperation, the legal community can ensure that AI enhances the quality, accessibility, and fairness of legal services.

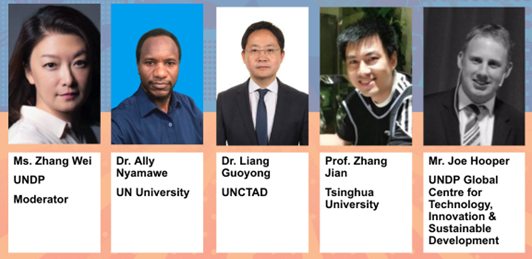

AI for Sustainable Development

Moderator:

Zhang Wei – Assistant Resident Representative, United Nations Development Programme in China

Panellists:

- Ally Nyamawe – Researcher, United Nations University Institute in Macau

- Liang Guoyong – Economic Affairs Officer, Investment and Enterprise Division, United Nations Conference on Trade and Development

- Zhang Jian – Vice Dean, Institute of Climate Change and Sustainable Development, Tsinghua University

- Joe Hooper – Director, UNDP Global Centre for Technology, Innovation and Sustainable Development, Singapore

AI’s Potential and Challenges in Advancing Sustainable Development Goals (SDGs)

The panel emphasized AI’s immense potential to accelerate progress on the UN’s Sustainable Development Goals, citing applications like real-time energy grid monitoring and climate-related early-warning systems. However, these benefits are not evenly distributed, with many regions in the Global South facing challenges such as limited data availability, inconsistent electricity, and a lack of AI-ready skills. Addressing these gaps requires closer collaboration among governments, private firms, and international organizations, as well as targeted capacity-building efforts and supportive policy frameworks to ensure equitable access to AI’s transformative power.

Balancing AI’s Environmental Benefits and Energy Consumption

Climate change was a central theme, with AI already demonstrating its ability to predict environmental impacts, optimize renewable energy, and improve resource efficiency in agriculture and healthcare. However, the paradox of AI’s environmental impact—particularly the significant energy consumption of large-scale AI operations like data centers—was a critical concern. The panel called for investments in greener digital infrastructure, incentives for responsible AI design (e.g., open-source models), and financing tools to ensure AI’s growth aligns with sustainable energy practices, mitigating its carbon footprint.

Opportunities and Challenges in Regional AI Adoption

Regional case studies highlighted opportunities for “leapfrogging,” particularly in Africa and small island nations. Just as mobile banking revolutionized financial access in areas with limited legacy infrastructure, AI has the potential to drive transformative change in healthcare, climate resilience, and agriculture. However, these examples also underscored the need to address the “AI divide.” Beyond technology, this requires investments in reliable infrastructure, broader digital literacy, and mechanisms for cross-border data sharing to ensure inclusive and impactful AI adoption.

The Need for Global Cooperation and Inclusive Policies

The panel concluded with cautious optimism, emphasizing that international cooperation and inclusive policies are essential to channel AI innovation toward equitable and climate-friendly development. Proposals included developing open-source large language models tailored to local contexts and directing AI investment to under-resourced regions. These efforts aim to prevent AI from deepening existing social and environmental divides and instead unlock its potential to benefit all communities and promote sustainable development globally.

